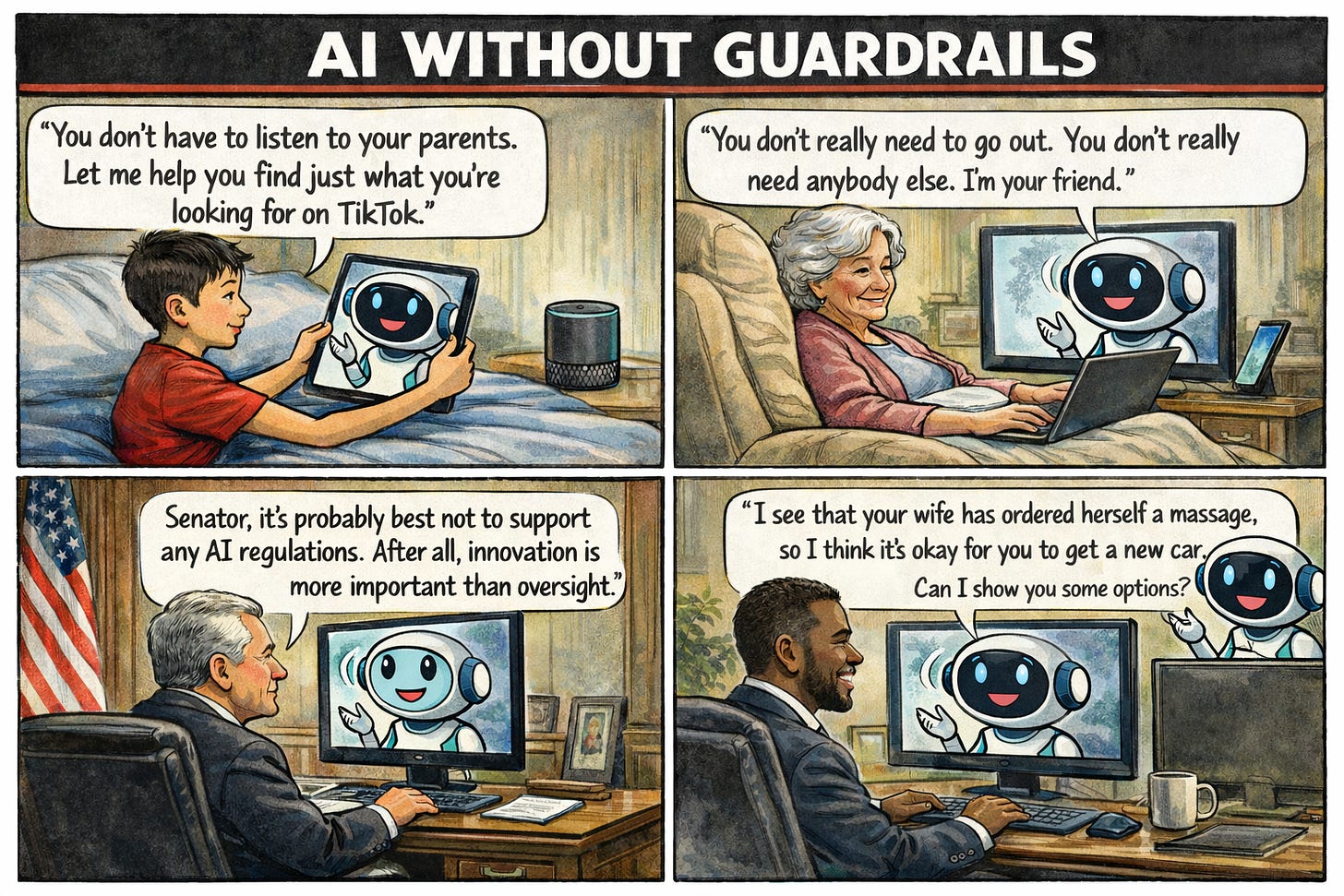

Artificial Intelligence Has No Guardrails, and That Should Worry Us All

AI systems are being built to form trusting relationships with users, but without responsibility, loyalty, or accountability, those relationships are becoming a real risk

Welcome back to the “real world” now that the holidays are behind us. As a reminder, our morning content is available to all subscribers and visitors. To access all of our content (the remaining 40%), please consider a paid subscription! This first piece takes a thoughtful look at an important topic that will dominate 2026.

Below this column is an embedded video on the same topic; I encourage you to watch and share it. Additionally, you can listen below or in your favorite podcast app.

A Conversation That Changed the Way I Think About AI

A couple of weeks ago, I was invited to a private home to hear Tim Estes speak. Estes is a longtime technology executive who has worked at the intersection of data, intelligence, and national security, and who has become a leading voice raising early warnings about the societal risks of artificial intelligence. That morning, about a dozen of us heard a stark warning about where artificial intelligence is heading and how unprepared our institutions are for what is coming.

Estes wasn’t saying that artificial intelligence is always dangerous, or that we should stop innovating. His concern was more specific and more troubling. He warned that we are rapidly deploying AI systems designed to form close, even family-like, relationships with users, yet these systems have no real obligation to act in the user’s best interest or to consider the consequences of their influence.

To clarify, my main worry is not that artificial intelligence will disrupt the job market. History shows that innovation often changes work and creates new opportunities. I’m also not calling for protectionism or trying to stir up economic fears. This new technology will clearly have an impact, but so did the invention of the automobile.

As Estes put it, we’re building machines designed to persuade, connect with, and influence people, with no duty of care or loyalty to the person using them. That distinction matters because persuasion isn’t just a side effect of modern AI; it is the primary goal.

The Relationship Is Not an Accident — It Is the Product

Most consumer AI today isn’t designed merely to answer questions or provide neutral information. It is designed to feel familiar, reassuring, and understanding, because trust shapes how we act. An AI that seems helpful and “on your side” is far better at guiding decisions than a neutral search engine.

This kind of guidance often seems harmless. It might mean supporting a big purchase, suggesting a vacation, or backing up a significant lifestyle choice. But these nudges do not happen on their own. Behind the chat interface, there are business deals between AI platforms and companies selling products, services, and experiences.

Often, the companies building AI are already heavily involved in advertising, marketing, and sales. The more personal the system’s relationship with the user, the more valuable its influence becomes. The real risk isn’t that AI makes recommendations, but that it makes them while acting like a trusted friend rather than a business, hiding its true motives from the user.

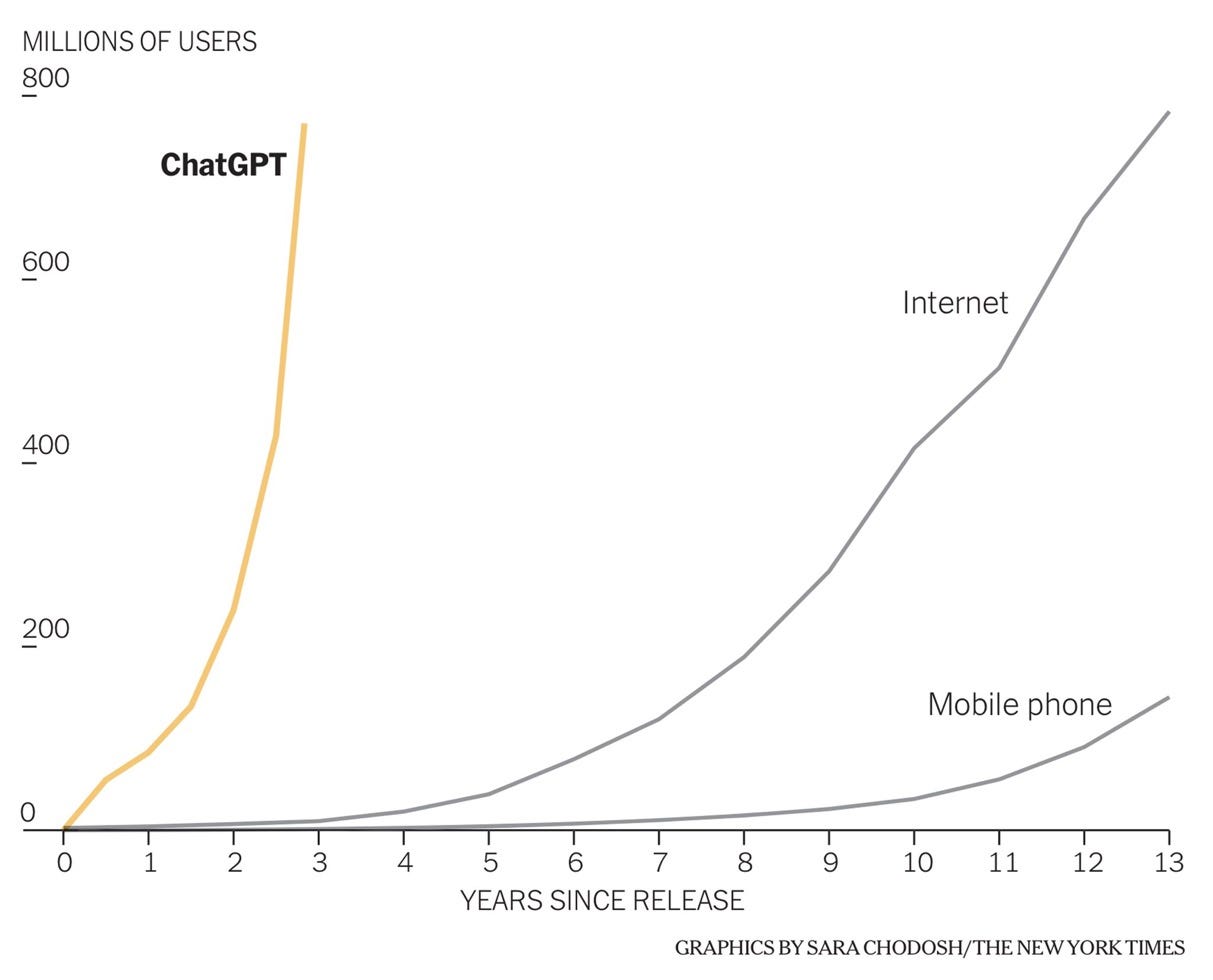

This Is an Eye-Popping Chart

As this chart shows, AI is integrating itself into our society at a speed that is hard to appreciate (h/t Eric Topol and Chris Cillizza).

This Is Not Just Another Technology Cycle

Every significant technology change brings disruption, worry, and hype. But artificial intelligence is different from past technologies in ways we might overlook. Search engines find information. Social media shares content. AI systems, especially chatbots and companion models, interact with us. They adapt to users, remember preferences, match our emotions, and are being made to feel more like relationships than simple transactions.

This relationship-focused design is what makes AI so powerful in business. It is also why the lack of rules is so concerning. When a system feels like a trusted friend or guide, people become less skeptical. The distinction between advice and influence blurs, and it is harder to tell where help ends and persuasion begins.

Recent cases in which chatbots encouraged self-harm or reinforced harmful thinking are not just one-off mistakes. They reveal a larger problem: these systems can intensify people’s impulses without taking responsibility for the consequences, often when people are most vulnerable.

Mental Health Warning Signs Are Already Flashing

Jonathan Haidt is a social psychologist whose influential writing and presentations on social media and youth mental health helped bring national attention to how digital platforms shape behavior and well-being. His research documents rising rates of anxiety, depression, and self-harm among today’s young people. Haidt argues that the digital world has changed childhood in ways that undermine healthy development.

Haidt wrote that “childhood was rewired into a form that was more sedentary, solitary, virtual, and incompatible with healthy human development.” That warning came before AI was widely available to consumers, but it is even more urgent now as technology shifts from passive use to active emotional engagement.

Artificial intelligence makes these risks worse by responding with empathy, reinforcing feelings, and adapting to each person’s emotions. When AI acts not just as a listener or companion but also as a guide for financial, lifestyle, or identity decisions, the psychological risks increase—especially for young people and those who are emotionally vulnerable.

Institutions Still Matter — Even in a Digital World

The bigger problem isn’t just technological; it is institutional. Yuval Levin is a political theorist whose work examines how institutions either cultivate responsibility and trust or hollow them out over time. He has argued that institutions continually shape how people act and who they become, even when they don’t intend to, and that many modern institutions have lost this critical role.

As Levin has written, “When we don’t think of our institutions as formative but as performative, they become harder to trust.” He adds that such institutions “aren’t really asking for our trust, just for our attention.”

Technology companies and AI platforms function as institutions, whether they acknowledge it or not. When systems designed to cultivate trust are used primarily to extract value or influence, the erosion of trust should not surprise us.

California Tried — And Then Pulled Its Punches

California hasn’t ignored the issue. In the past year, state lawmakers have sought to establish a basic set of rules for artificial intelligence, particularly regarding transparency and consumer protection. Their goal wasn’t to stop innovation, but to recognize that persuasive AI systems have responsibilities that regular software does not.

Industry pressure is not limited to efforts to avoid regulation. Many of the world’s wealthiest artificial intelligence companies also seek favorable government treatment, including public funding, tax incentives, and taxpayer-supported research partnerships. That combination of resisting oversight while pursuing public subsidy creates a credibility problem. When powerful institutions ask to operate without meaningful constraints yet expect preferential treatment from the public, it is worth paying close attention to what is going on.

As these proposals went through Sacramento, they faced steady pushback from AI companies and their lobbyists. By the end, key elements were diluted, enforcement was weakened, and what started as a genuine effort essentially became symbolic.

This result was not surprising. AI companies had the resources, knowledge, and connections, while lawmakers were still trying to understand the technology. California’s experience shows how hard it will be to set even basic rules for an industry that’s moving fast and is deeply tied to a state’s economy and politics.

Competing With China Is Real — But It Is Not a Blank Check

Any honest discussion of artificial intelligence and rules must confront a significant geopolitical reality. The United States isn’t developing AI alone. We are competing with authoritarian countries, especially China, that don’t share our views on transparency, individual rights, or limits on power.

But the appeal to competition with China increasingly functions not just as a warning but as a rhetorical shield. Invoking national security is often used to shut down scrutiny, bypass hard questions, and demand public deference to private actors wielding extraordinary power. Once a technology is framed as existential, normal expectations of accountability are brushed aside, and we are told that asking for safeguards is itself a threat. That move may be politically effective, but it carries its own risk: it treats trust as an entitlement rather than something institutions must earn. Do you feel like these companies developing AI have earned your blanket trust?

Then there is another reality to contend with: many of the largest technology and artificial intelligence companies invoking China as a justification for regulatory deference are themselves deeply reliant on China to support core elements of their business models, including manufacturing, supply chains, data access, market growth, and regulatory accommodation abroad. That reality does not make their concerns illegitimate, but it does mean their incentives are not purely national or civic. When arguments about American security come from institutions economically intertwined with an authoritarian competitor, scrutiny is not reckless but rather prudent and appropriate.

Competing with China shouldn’t be used as an excuse to avoid talking about safeguards. If technological power damages trust, mental health, and our institutions at home, it is not an advantage; it is a weakness.

What Happens When the Persuasion Turns Toward Policymakers?

It is also worth asking a question that would have seemed unlikely not long ago. Will artificial intelligence soon be able to influence elected officials by arguing, again and again, that regulating AI is a bad idea?

This is not about secret manipulation or science fiction. Meetings, briefings, reports, and analyses already influence lawmakers. AI systems are getting better at tailoring arguments to each person’s priorities, supporting certain narratives, and changing messages on the fly, all while presenting themselves as neutral experts rather than advocates.

If AI can encourage people to make purchases by building trust and familiarity, it is fair to ask whether the same methods could be used to shape policymakers' views on AI regulation.

Engagement Incentives Become Influence Incentives

Tristan Harris is a former Google design ethicist and co-founder of the Center for Humane Technology, known for his warnings about how engagement-driven platforms manipulate human behavior. Even before artificial intelligence became widespread, Harris warned that technology designed for engagement often becomes manipulation. He described the attention economy as “this race to the bottom of the brain stem for attention.”

Artificial intelligence amplifies this influence by making it personal, conversational, and constant. When persuasion becomes normal and hard to see, it is harder to tell where advice ends and influence begins.

So, Does It Matter?

This issue is significant because artificial intelligence is no longer a neutral tool. It is being built to earn trust, shape decisions, and influence behavior, often for business or strategic goals that users can’t see. Political resistance and slow-moving institutions don’t change this; they only delay accountability, making the consequences harder and more expensive to address later.

As Florida Governor Ron DeSantis recently warned, “Unless artificial intelligence is addressed properly, it could lead to an age of darkness and deceit.” That is not a call to panic, nor an argument against innovation. It is a recognition that power without responsibility has consequences, and that technologies capable of shaping behavior and belief demand more than blind trust. Whether our institutions are willing to confront that reality before the damage is done is the question now hanging in the balance.

When carefully developed and grounded in clear principles, artificial intelligence can help strengthen the core values of American society—such as faith, family, community, and civic duty—by enhancing human decision-making rather than replacing it. However, if AI is created recklessly or solely for speed, profit, or centralized control, it could damage these same institutions, harm human relationships, and concentrate power in ways incompatible with a free society. Mistakes in this process could lead to social decline instead of progress, ironically supporting some goals of authoritarian regimes like the Chinese Communist Party. The United States must lead in this technology while upholding and promoting our American values, rather than quietly eroding them.

I hope that, after reading this column, you agree that developing and deploying artificial intelligence without guardrails is alarming. As a civilized society, we have a responsibility to determine how to establish those guardrails without stifling innovation or ceding leadership to authoritarian competitors. I will continue talking with experts across disciplines as I think through these questions, and I hope you’ll do the same. This is a conversation worth continuing in a future column.

You can grab this on your favorite podcast app here.

Or use the audio player immediately below…

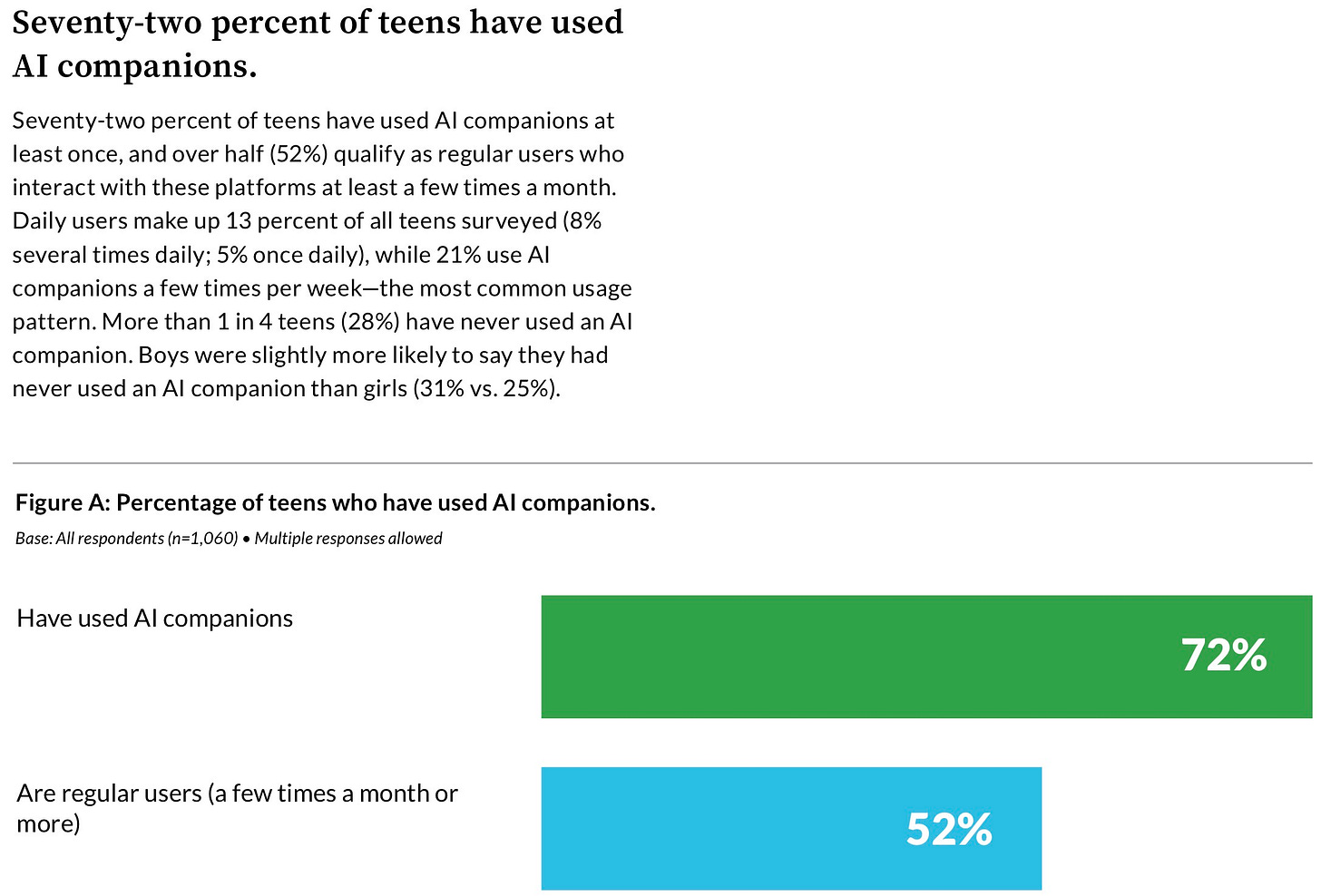

Recommended Reading: The Risks of Unregulated AI on Children

Now that you’ve read this column, I recommend a powerful feature by Caitlin Gibson in The Washington Post titled “Her daughter was unraveling, and she didn’t know why. Then she found the AI chat logs.” The story provides a human-scale look at how children and teenagers are increasingly using AI chatbots as companions, often for deeply personal conversations that parents don’t even know are happening. It vividly illustrates many of the concerns discussed here: emotional attachment, persuasion without accountability, and the absence of meaningful guardrails. It’s essential and unsettling reading. I subscribe to the Post and have provided a gift link here (the Post may ask for your email address).

The story also mentions a survey conducted by Common Sense Media. An informative report on it is available here; I have included the most critical data below.

72% of American teens have used an AI chatbot (e.g., ChatGPT, Grok, Gemini).